(Courtney Nash’s excellent post on this topic inadvertently pushed me to finally finish this – give it a read)

In the last post on this topic, I hoped to lay the foundation for what a mature role for automation might look like in web operations, and to bring some considerations to the decision to include automation as part of a design. As Richard mentioned in his excellent comment on that post, this is essentially a very high-level overview of the past 30 years of research into the effects, benefits, and ironies of automation.

I also hoped in that post to challenge people to investigate their assumptions about automation.

Namely:

- when will automation be appropriate,

- what problems could it help solve, and

- how should it be designed in order to augment and complement (not simply replace) human adaptive and processing capacities.

The last point is what I’d like to explore further here. Dr. Cook also pointed out that I had skipped over entirely the concept of task allocation as an approach that didn’t end up as intended. I’m planning on exploring that a bit in this post.

But first: what is responsible for the impulse to automate that can grab us so strongly as engineers?

Is it simply the disgust we feel when we find (often in hindsight) a human-driven process that made a mistake (maybe one that contributed to an outage) that is presumed impossible for a machine to make?

It turns out that there are a number of automation ‘philosophies’, some of which you might recognize as familiar.

Philosophies and Approaches

One: The Left-Over Principle

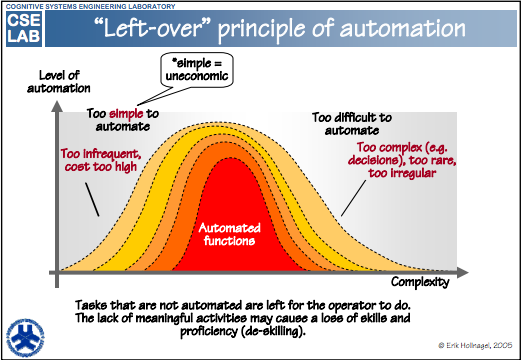

One common way to think of automation is to gather up all of the tasks, and sort them into things that can be automated, and things that can’t be. Even the godfather of Human Factors, Alphonse Chapanis, said that it was reasonable to “mechanize everything that can be mechanized” (here). The main idea here is efficiency. Functions that cannot be assigned to machines are left for humans to carry out. This is known as the ‘Left-Over’ Principle.

David Woods and Erik Hollnagel have a response to this early incarnation of the “automate all the things!” approach, in Joint Cognitive Systems: Foundations of Cognitive Systems Engineering, which is (emphasis mine):

“The proviso of this argument is, however, that we should mechanise everything that can be mechanised, only in the sense that it can be guaranteed that the automation or mechanisation always will work correctly and not suddenly require operator intervention or support. Full automation should therefore be attempted only when it is possible to anticipate every possible condition and contingency. Such cases are unfortunately few and far between, and the available empirical evidence may lead to doubts whether they exist at all.

Without the proviso, the left-over principle implies a rather cavalier view of humans since it fails to include any explicit assumptions about their capabilities or limitations — other than the sanguine hope that the humans in the system are capable of doing what must be done. Implicitly this means that humans are treated as extremely flexible and powerful machines, which at any time far surpass what technological artefacts can do. Since the determination of what is left over reflects what technology cannot do rather than what people can do, the inevitable result is that humans are faced with two sets of tasks. One set comprises tasks that are either too infrequent or too expensive to automate. This will often include trivial tasks such as loading material onto a conveyor belt, sweeping the floor, or assembling products in small batches, i.e., tasks where the cost of automation is higher than the benefit. The other set comprises tasks that are too complex, too rare or too irregular to automate. This may include tasks that designers are unable to analyse or even imagine. Needless to say that may easily leave the human operator in an unenviable position.”

So to reiterate, the Left-Over Principle basically says that the things that are “left over” after automating as much as you can are either:

- Too “simple” to automate (economically, the benefit of automating isn’t worth the expense of automating it) because the operation is too infrequent, OR

- Too “difficult” to automate; the operation is too rare or irregular, and too complex to automate.
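To make the shape of that allocation concrete, here is a minimal sketch in Python. The `Task` fields, the example tasks, and the cost and benefit numbers are all assumptions invented for illustration; none of them come from Hollnagel and Woods.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    automatable: bool          # hypothetical: can we figure out how to automate it?
    automation_cost: float     # hypothetical, arbitrary units
    automation_benefit: float  # hypothetical, arbitrary units


def left_over_allocation(tasks):
    """Give the machine whatever can be automated economically;
    everything else is 'left over' for the human."""
    machine, human = [], []
    for task in tasks:
        worth_it = task.automation_benefit > task.automation_cost
        if task.automatable and worth_it:
            machine.append(task.name)
        else:
            # Too "simple" (not worth the expense) or too "difficult".
            human.append(task.name)
    return machine, human


tasks = [
    Task("rotate logs", True, 1.0, 10.0),
    Task("rebuild a rarely used report", True, 8.0, 1.0),
    Task("diagnose a novel outage", False, 0.0, 0.0),
]
machine, human = left_over_allocation(tasks)
print("machine:", machine)
print("human:", human)
```

Notice what the function never asks: whether a human can actually perform the left-over tasks well. The human list is defined entirely by what the machine side could not take.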

One critique of the Left-Over Principle is what Bainbridge points to in her second irony, which I mentioned in the last post. The tasks that are “left over” after automating everything you can are the ones you couldn’t figure out how to automate effectively (because they are too complicated, or too infrequent to be worth it), and those are the tasks you then hand back to the human to deal with.

So hold on: I thought we were trying to make humans’ lives easier, not more difficult?

Giving all of the easy bits to the machine and the difficult bits to the human also has the side effect of amplifying the workload on humans in terms of cognitive load and vigilance. (It turns out that it’s relatively trivial to write code that can do a boatload of complex things quite fast.) There’s usually little consideration given to whether or not the human could effectively perform these remaining non-automated tasks in a way that will benefit the overall system, including the automated tasks.

This approach also assumes that the tasks that are now automated can be done in isolation from the tasks that can’t be, which is almost never the case. When only humans are working on tasks, even with other humans, they can stride at their own rate individually or as a group. When humans and computers work together, the pace is set by the automated part, so the human needs to keep up with the computer. This underscores the importance of considering automation in the context of humans and computers working jointly. Together. As a team, if you will.

We’ll revisit this idea later, but the idea that automation should place high priority and focus on the human-machine collaboration instead of their individual capacities is a main theme in the area of Joint Cognitive Systems, and one that I personally agree with.

Parasuraman, Sheridan, and Wickens (2000) had this to say about the Left-Over Principle (emphasis mine):

“This approach therefore defines the human operator’s roles and responsibilities in terms of the automation. Designers automate every subsystem that leads to an economic benefit for that subsystem and leave the operator to manage the rest. Technical capability or low cost are valid reasons for automation, given that there is no detrimental impact on human performance in the resulting whole system, but this is not always the case. The sum of subsystem optimizations does not typically lead to whole system optimization.”

Two: The “Compensatory” Principle

Another familiar approach (or justification) for automating processes rests on the idea that you should exploit the strengths of both humans and machines differently. The basic premise is: give the machines the tasks that they are good at, and the humans the things that they are good at.

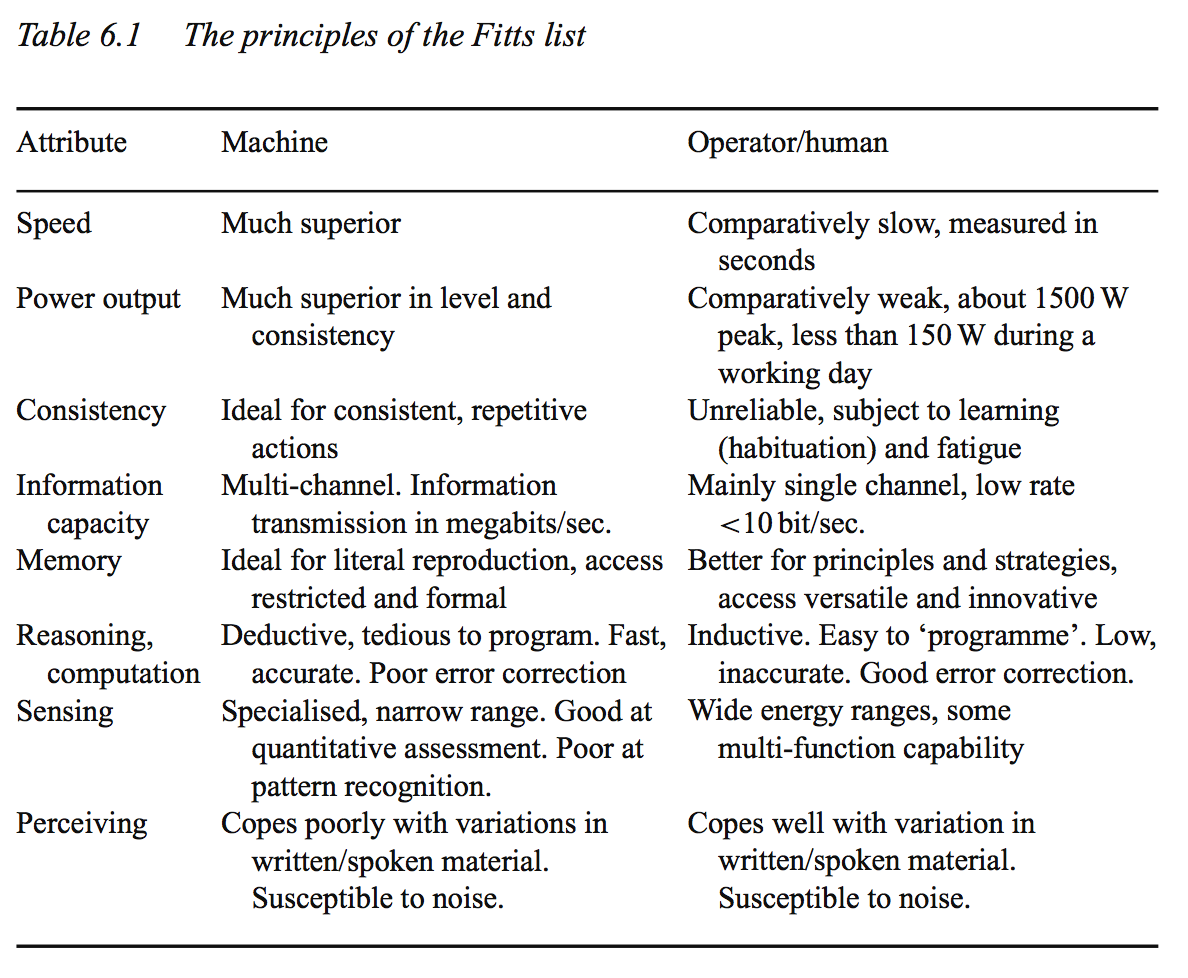

This is called the Compensatory Principle, based on the idea that humans and machines can compensate for each other’s weaknesses. It’s also known as functional allocation, task allocation, comparison allocation, or the MABA-MABA (“Men Are Better At-Machines Are Better At”) approach.

Historically, functional allocation has been most embodied by “Fitts’ List”, which comes from a report in 1951, “Human Engineering For An Effective Air Navigation and Traffic-Control System” written by Paul Fitts and others.

Fitts’ List, which is essentially the original MABA-MABA list, juxtaposes human with machine capabilities to be used as a guide in automation design to help decide who (human or machine) does what.

Here is Fitts’ List:

Humans appear to surpass present-day machines with respect to the following:

- Ability to detect small amounts of visual or acoustic energy.

- Ability to perceive patterns of light or sound.

- Ability to improvise and use flexible procedures.

- Ability to store very large amounts of information for long periods and to recall relevant facts at the appropriate time.

- Ability to reason inductively.

- Ability to exercise judgment.

Modern-day machines (then, in the 1950s) appear to surpass humans with respect to the following:

- Ability to respond quickly to control signals and to apply great forces smoothly and precisely.

- Ability to perform repetitive, routine tasks.

- Ability to store information briefly and then to erase it completely.

- Ability to reason deductively, including computational ability.

- Ability to handle highly complex operations, i.e., to do many different things at once.

This approach is intuitive for a number of reasons. It at least recognizes that when it comes to a certain category of tasks, humans are much superior to computers and software.

Erik Hollnagel summarized the Fitts’ List in Human Factors for Engineers:

It does a good job of looking like a guide; it’s essentially an IF-THEN conditional on where to use automation.
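As a rough illustration of that IF-THEN reading, the list could be turned into a lookup like the Python sketch below. The short capability labels are my own paraphrases of the list items above, and the `allocate` function is hypothetical; Fitts’ report contains no such procedure.

```python
# Paraphrased labels for the items in Fitts' List (assumed wording, not Fitts').
HUMANS_BETTER_AT = {
    "detecting faint signals",
    "perceiving patterns",
    "improvising",
    "long-term recall",
    "inductive reasoning",
    "judgment",
}
MACHINES_BETTER_AT = {
    "fast, precise response",
    "repetitive tasks",
    "short-term storage",
    "deductive reasoning",
    "many things at once",
}


def allocate(capability: str) -> str:
    """Hypothetical MABA-MABA lookup: IF the machine is better THEN automate."""
    if capability in MACHINES_BETTER_AT:
        return "machine"
    if capability in HUMANS_BETTER_AT:
        return "human"
    return "not on the list"


print(allocate("repetitive tasks"))  # machine
print(allocate("judgment"))          # human
```

The lookup only works if every piece of work arrives already labeled with exactly one capability.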

So what’s not to like about this approach?

While this is a reasonable way to look at the situation, it has some well-explored difficulties that make it basically impossible to use as a practical rationale.

Criticisms of the Compensatory Principle

There are a number of strong criticisms of this approach as an argument for putting automation in place. The one I agree with most is that the work we do in engineering is never as decomposable as the list would imply. You can’t simply say “I have a lot of data analysis to do over huge amounts of data, so I’ll let the computer do that part, because that’s what it’s good at. Then it can present me the results and I can make judgements over them.” for much (if not all) of the work we do.

The systems we build have enough complexity in them that we can’t simply put tasks into these boxes or categories, because then the cost of moving between them becomes extremely high. So high that the MABA-MABA approach, as it stands, is pretty useless as a design guide. The world we’ve built around ourselves simply doesn’t fit neatly into these buckets; we move dynamically between judging and processing and calculating and reasoning and filtering and improvising.

Hollnagel unpacks it more eloquently in Joint Cognitive Systems: Foundations of Cognitive Systems Engineering:

“The compensatory approach requires that the situation characteristics can be described adequately a priori, and that the variability of human (and technological) capabilities will be minimal and perfectly predictable.”

“Yet function allocation cannot be achieved simply by substituting human functions by technology, nor vice versa, because of fundamental differences between how humans and machines function and because functions depend on each other in ways that are more complex than a mechanical decomposition can account for. Since humans and machines do not merely interact but act together, automation design should be based on principles of coagency.”

David Woods refers to Norbert’s Contrast (from Norbert Wiener’s 1950 The Human Use of Human Beings):

Norbert’s Contrast

Artificial agents are literal minded and disconnected from the world, while human agents are context sensitive and have a stake in outcomes.

With this perspective, we can see why work can’t necessarily be decomposed and divided between computers and humans simply based on what each does well.

Maybe, just maybe: there’s hope in a third approach? If we were to imagine humans and machines as partners? How might we view the relationship between humans and computers through a different lens of cooperation?

That’s for the next post. 🙂

I think an answer to your closing question could benefit from user-centered design thinking and a focus on users’ jobs-to-be-done. System administrators are people, and users, and need to accomplish things in order to do their jobs. Digital services companies’ effectiveness depends in part on system administrators’ effectiveness. Putting their needs and goals at the center of our design efforts can help us create truly beneficial and effective combinations of organizations, manual activities, and automated activities.

Great post John – really got me thinking about automation and inspired me to write one of my own:) http://bit.ly/16IzScG. Would love to hear your take on it.

“high coherence of process information, high process complexity and high process controllability (whether manual or by adequate automatics) were all associated with low levels of stress and workload and good health… High process controllability, good interface ergonomics and a rich pattern of activities were all associated with high feeling of achievement” – Bainbridge

I work with teams of both automators and operators. There is a current of mistrust running between them based on a history of inhumane assumptions and practices on both sides in the expression of their skills and intelligence. These teams are software engineers who must learn the language of automated deployment systems, systems engineers who monitor the deployment process and must make intercession decisions when things go wrong, and operations engineers who are responsible for the results of the automated deployment in production.

So I see this as a question of better cooperation between the humans who design and implement automation systems and the humans who operate them. To put a very fine point on Jeff’s comment above: the machines are secondary to the problem at hand. It is necessary to consider the humanity of all participants in a given process (including the automation designers themselves) if we want our automated systems to be in any way humane. It would help considerably if the tools and processes used by automation designers helped to reinforce this perspective, but the real help comes from authentic respect and care for the human at every step in the system.

There is a field of automation where it’s not a case of either principle, as I understand it. I’ve seen it across the hospital in computer systems that provide forms of documentation support. Not inside-the-support loop devices but decision aids.

I did a perfusion info system years ago and it did some nasty calculations that previously had to be estimated by rule of thumb. Values such as Vo2 — actually “v-dot-O2” but I can’t get the symbol there — which is the key measure of successful perfusion. Hideously difficult to mentally calculate due to being derived from so many other calculations (AVdO2, all the way down to BSA). Product also provided the legally mandated record of activity (both by the perfusionist and by capturing data from monitors and the heart-lung machine).

It was sold as an automation tool for replacing the perfusion chart but the configurable calculations made it far stronger as support automation. In beta trials, there was one instance where the perfusionist was going to do one thing on the basis of training but based on the newly available real-time calculations, decided she’d misdiagnosed the situation and the original corrective plan would’ve been patient adverse. She was right to change (later she called me in tears because, she told me, what about all those cases before then where she had come to the same decision point?)

Was there a next post?

Good pair of articles. I read them with an eye toward test automation rather than WebOps automation, but I think the issues are similar. There are valid, useful, intermediate points between testing everything manually and a completely push-button system that spits out a single “Ship / Don’t Ship”.

In my experience, it is difficult to automate something perfectly in the first iteration. Getting it right means trying something, observing what happens, making corrections, and then repeating the process.

I don’t really approach this academically in such a way, but in a roundabout way, using a layman approach of Cynefin to build a similar body of knowledge about automation and knowledge of systems. Spoke about it at puppetcamp a few years ago:

https://www.slideshare.net/ByronMiller2/puppetcamp-dallas-6102014-enterprise-devops. In fact, I think a Cynefin approach on top of what you describe would be a great way to build knowledge and understanding even further.
