(this is part 2 of a series: here is part 1)

One of the challenges of building and operating complex systems is that it’s difficult to talk about one facet or component of them without bleeding the conversation into other related concerns. That’s the funky thing about complex systems and systems thinking: components come together to behave in different (sometimes surprising) ways that they never would on their own, in isolation. Everything always connects to everything else, so it’s always tempting to see connections and want to include them in discussion. I suspect James Urquhart feels the same.

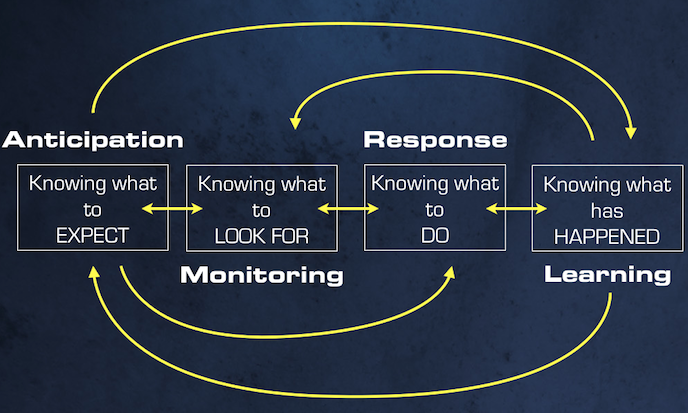

So one helpful bit (I’ve found) is Erik Hollnagel’s Four Cornerstones of Resilience. I’ve been using it as a lens with which to discuss and organize my thoughts on…well, everything. I think I’ve included them in every talk, presentation, or maybe even every conversation I’ve had with anyone for the last year and a half. I’m planning on annoying every audience for the foreseeable future by including them, because I can’t seem to shake how helpful they are as a lens into viewing resilience as a concept.

The greatest part about the four cornerstones is that they’re a simplification device for discussion. And simplifications don’t come easily when talking about complex systems. The other bit that I like is that they make it straightforward to see relationships between the activities and challenges in each of them, as they relate to each other.

For example: learning is traditionally punctuated by Post-Mortems, and what (hopefully) comes out of PMs? Remediation items. Tasks and facts that can aid:

- monitoring, (example: “we need to adjust an alerting threshold or mechanism to be more appropriate for detecting anomalies”)

- anticipation, (example: “we didn’t see this particular failure scenario coming before, so let’s update our knowledge on how it came about.”)

- response (example: “we weren’t able to troubleshoot this issue as quickly as we’d like because communicating during the outage was noisy/difficult, so let’s fix that.”)

I’ll leave it as an exercise to imagine how anticipation can then affect monitoring, response, and learning. The point here is that each of the four cornerstones can affect the others in all sorts of ways, and those relationships ought to be explored.

I do think it’s helpful when looking at these pieces to understand that they can exist on a large time window as well as a small one. You might be tempted to view them in the context of infrastructure attributes in outage scenarios; this would be a mistake, because it narrows the perspective to what you can immediately influence. Instead, I think there’s a lot of value in looking at the cornerstones as a lens on the larger picture.

Of course going into each one of these in detail isn’t going to happen in a single epic too-long blog post, but I thought I’d mention a couple of things that I currently think of when I’ve got this perspective in mind.

Anticipation

This is knowing what to expect, and dealing with the potential for fundamental surprises in systems. This involves what Westrum and Adamski called “Requisite Imagination”, the ability to explore the possibilities of failure (and success!) in a given system. The process of imagining scenarios in the future is a worthwhile one, and I certainly think a fun one. The skill of anticipation is one area where engineers can illustrate just how creative they are. Cheers to the engineers who can envision multiple futures and sort them based on likelihood. Drinks all around to those engineers who can take that further and explain the rationale for their sorted likelihood ratings. Whereas monitoring deals in the now, anticipation deals in the future.

At Etsy we have a couple of tools that help with anticipation:

- Architectural Reviews: These are meetings, open to all of engineering, that we hold when someone proposes a new pattern or a new type of technology, to discuss what is being introduced and why. We gather up the people proposing the idea, and then spend time poking holes in it, with the goal of making the solution stronger than it might have been on its own. We also entertain what we’d do if things didn’t go according to plan with the idea. We take adopting new technologies very seriously, so this doesn’t happen very often.

- Go or No-Go Meetings (a.k.a. Operability Reviews): These are where we gather up representative folks (at least someone from Support, Community, Product, and obviously Engineering) to discuss some fundamentals on a public-facing change, and walk through any contingencies that might need to happen. The trick is that in order to get contingencies into the discussion, you have to name the circumstances where they’d come up.

- GameDay Exercises: These are exercises where we validate our confidence in production by causing as many horrible things as we can to components while they’re in production. Even asking if a GameDay is possible sparks enough conversation to be useful, and burning a component to the ground to see how the system behaves when it does is always a useful task. We want no unique snowflakes, so being able to stand a component up as fast as it can burn down is fun for the whole family.

But anticipation isn’t just about thinking along the lines of “what could possibly go wrong?” (although that is always a decent start). It’s also about the organization, and how a team behaves when interacting with the machines. Recognizing when your adaptive capacity is failing is key to anticipation. David Woods has collected some patterns of anticipation worth exploring, many of which relate to a system’s adaptive capacity:

- Recognize when adaptive capacity is failing – Example: Can you detect when your team’s ability to respond to outages degrades?

- Recognize the threat of exhausting buffers or reserves – Example: Can you tell when your tolerances for faults are breached? When your team’s workload prevents proactive planning from getting done?

- Recognize when to shift priorities across goal trade-offs – Example: Can you tell when you’re going to have to switch from greenfield development, and focus on cleaning up legacy infra?

- Able to make perspective shifts and contrast diverse perspectives that go beyond their nominal position – Example: Can Operations understand the goals of Development, and vice-versa, and support them in the future?

- Able to navigate interdependencies across roles, activities, and levels – Example: Can you foresee what’s going to be needed from different groups (Finance, Support, Facilities, Development, Ops, Product, etc.) and who in those teams needs to be kept up-to-date with ongoing events?

- Recognize the need to learn new ways to adapt – Example: Will you know when it’s time to include new items in training incoming engineers, as failure scenarios and ways of working change in the organization and infrastructure?

I’m fascinated by the skill of anticipation, frankly. I spoke at Velocity Europe in Berlin last year on the topic.

Monitoring

This is knowing what to look for, and dealing with the critical in systems. Not just the mechanics of servers and networks and applications, but monitoring in the organizational sense. Anomaly detection and metrics collection and alerting are obviously part of this, and should be familiar to anyone expecting their web application to be operable.

But in addition to this, we’re talking as well about meta-metrics on the operations and activities of both infrastructure and staff.

Things like:

- How might a team measure its cognitive load during an outage, in order to detect when it is drifting?

- Are there any gaps that appear in a team’s coordinative or collaborative abilities, over time?

- Can the organization detect when there are goal conflicts (example: accelerating production schedules in the face of creeping scope) quickly enough to make them explicit and do something about them?

- What leading or lagging indicators could you use to gauge whether or not the performance demand of a team is beyond what could be deemed “normal” for the size and scale it has?

- How might you tell if a team is becoming complacent with respect to safety, when incidents decrease? (“We’re fine! Look, we haven’t had an outage for months!”)

- How can you confirm that engineers are being ramped up adequately to being productive and adaptive in a team?

Response

This is knowing what to do, and dealing with the actual in systems. Whether or not you’ve anticipated a perturbation or disturbance, as long as you can detect it, then you have something to respond to. How do you? Page the on-call engineer? Are you the on-call engineer? Response is fundamental to working in web operations, and differential diagnosis is just as applicable to troubleshooting complex systems as it is to medicine.

Pitfalls in responding to surprising behaviors in complex systems have exotic and novel characteristics. They are the things that make Post-Mortem meetings dramatic; they can often include stories of surprising turns of attention, focus, and results that make troubleshooting more of a mystery than anything. Dietrich Dörner, in his 1980 article “On The Difficulties People Have In Dealing With Complexity”, gave some characteristics of response in escalating scenarios. These might sound familiar to anyone who has experienced team troubleshooting during an outage:

…[people] tend to neglect how processes develop over time (awareness of rates) versus assessing how things are in the moment.

…[people] have difficulty in dealing with exponential developments (hard to imagine how fast things can change, or accelerate)

…[people] tend to think in causal series (A, therefore B), as opposed to causal nets (A, therefore B and C, therefore D and E, etc.)
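To put rough numbers on the second of those points, here is a small Python sketch. The queue depths, the five-minute doubling time, and the function names are all made up for illustration; none of them come from Dörner’s article or from a real outage. It compares a straight-line guess with what doubling actually produces after the same half hour.

```python
def linear_guess(start, step_per_minute, minutes):
    """Extrapolate in a straight line from the first observed change."""
    return start + step_per_minute * minutes


def doubling_growth(start, minutes, doubling_time):
    """Value after `minutes` if the quantity doubles every `doubling_time` minutes."""
    return start * 2 ** (minutes / doubling_time)


# Hypothetical observation: a queue grew from 100 to 200 items in 5 minutes.
start_depth = 100
observed_step = (200 - 100) / 5  # 20 items per minute, if you assume a straight line

linear_30 = linear_guess(start_depth, observed_step, 30)  # 700.0
expo_30 = doubling_growth(start_depth, 30, 5)             # 6400.0

print(f"straight-line guess at 30 min: {linear_30:.0f}")
print(f"doubling every 5 min at 30 min: {expo_30:.0f}")
```

Both guesses agree at the five-minute mark, which is part of why the straight-line intuition is so tempting during the early minutes of an escalating event.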

I was lucky enough to talk a bit more in detail about Resilient Response In Complex Systems at QCon in London this past year.

Learning

This is knowing what has happened, and dealing with the factual in systems. Everyone wants to believe that their team or group or company has the ability to learn, right? A hallmark of good engineering is empirical observation that results in future behavior changes. Like I mentioned above, this is the place where Post-Mortems usually come into play. At this point I think our field ought to be familiar with Post-Mortem meetings and the general structure and goal of them: to glean as much information as possible about an incident, an outage, a surprising result, a mistake, etc. and spread those observations far and wide within the organization in order to prevent them from happening in the future.

I’m obviously a huge fan of Post-Mortems and what they can do to improve an organization’s behavior and performance. But a lesser-known tool for learning is the “Near-Miss” opportunities we see in normal, everyday work. An engineer performs an action, and realizes later that it was wrong or somehow produced a result that is surprising. When those happen, we can hold them up high, for all to see and learn from. Did they cause damage? No, that’s why they “missed.”

One of the godfathers of cognitive engineering, James Reason, said that “near-miss” events are excellent learning opportunities for organizations, because they:

- They can act like safety “vaccines” for an organization, because they are just a little bit of failure that doesn’t really hurt.

- They happen much more often than actual systemic failures, so they provide a lot more data on latent failures.

- They are a powerful reminder of hazards, therefore keeping the “constant sense of unease” that is needed to provide resilience in a system.

I’ll add that encouraging engineers to share the details of their near-misses has a positive side effect on the culture of the organization. At Etsy, you will see (from time to time) an email to the whole team from an engineer that has the form:

Dear Everybody,

This morning I went to do X, so I did Y. Boy was that a bad idea! Not only did it not do what I thought it was going to, but also it almost brought the site down because of Z, which was a surprise to me. So whatever you do, don’t make the same mistake I did. In the meantime, I’m going to see what I can do to prevent that from happening.

Love,

Joe Engineer

For one, it provides the confirmation that anyone, at any time, no matter their seniority level, can make a mistake or act on faulty assumptions. The other benefit is that it sends the message that admitting to making a mistake is acceptable and encouraged, and that people should feel safe in admitting to these sometimes embarrassing events.

This last point is so powerful that it’s hard to emphasize it more. It’s related to encouraging a Just Culture, something that I wrote about recently over at Code As Craft, Etsy’s Engineering blog.

The last bit I wanted to mention about learning is purposefully not incident-related. One of the basic tenets of Resilience Engineering is that safety is not the absence of incidents and failures; it’s the presence of actions, behaviors, and culture (all along the lines of the four cornerstones above) that cause an organization to be safe. Learning only from failures means that the surface area to learn from is not all that large. To be clear, most organizations see successes much, much more often than they do failures.

One such focus might be changes. If, across 100 production deploys, you had 9 change-related outages, which should you learn from? Should you be satisfied to look at those nine, have postmortems, and then move forward, safe in the idea that you’ve rid yourself of the badness? Or should you also look at the 91 other deploys, and gather some hypotheses about why they ended up ok? You can learn from 9 events, or 91. The argument here is that you’ll be safer by learning from both.
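Here is a rough Python sketch of why the 91 matter. Everything in it is hypothetical: the `behind_flag` attribute, the way the 100 deploys split across it, and the `rate_by_attribute` helper are invented for illustration, not real data or real tooling. The only numbers taken from above are the totals (100 deploys, 9 outages).

```python
from collections import Counter

# Hypothetical deploy records: 9 outages and 91 successes, 100 in total.
# "behind_flag" is an invented attribute used only to illustrate the idea.
deploys = (
    [{"outage": True, "behind_flag": False}] * 6
    + [{"outage": True, "behind_flag": True}] * 3
    + [{"outage": False, "behind_flag": True}] * 80
    + [{"outage": False, "behind_flag": False}] * 11
)


def rate_by_attribute(records, attribute):
    """Return {attribute value: outage rate}, computed across ALL deploys."""
    totals, outages = Counter(), Counter()
    for record in records:
        totals[record[attribute]] += 1
        if record["outage"]:
            outages[record[attribute]] += 1
    return {value: outages[value] / totals[value] for value in totals}


rates = rate_by_attribute(deploys, "behind_flag")
for value, rate in sorted(rates.items()):
    print(f"behind_flag={value}: {rate:.0%} of deploys led to an outage")
```

Looking at the nine outages alone, you would only see a 6-to-3 split. It takes the successful deploys to supply the denominators (17 and 83 in this made-up data) that turn that split into a hypothesis worth exploring.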

So in addition to learning from why things go wrong, we ought to learn just as much from why things go right. Why didn’t your site go down today? Why aren’t you having an outage right now? This is likely due to a huge number of influences and reasons, all worth exploring.

….

Ok, so I lied. I didn’t expect this to be such a long post. I do find the four cornerstones to be a good lens with which to think and speak about resilience and complex systems. It’s as much a part of my vocabulary now as OODA and CAP are. 🙂



