(This post also appears on O’Reilly’s Radar blog. Many thanks to Daniel Schauenberg, Morgan Evans, and Steven Shorrock for their feedback on it.)

Before I begin this post, let me say that this is intended to be a critique of the Five Whys method, not a criticism of the people who are in favor of using it.

The critique I present here is hardly original; most of this post is inspired by Todd Conklin, Sidney Dekker, and Nancy Leveson.

The concept of post-hoc explanation (or “postmortems” as they’re commonly known) has, at this point, taken hold in the web engineering and operations domain. I’d love to think that the concepts that we’ve taken from the New View on ‘human error’ are becoming more widely known and that people are looking to explore their own narratives through those lenses.

I think that this is good, because my intent has always been (and may always be) to help translate concepts from one domain to another. To do this effectively, we also need to know what to discard (or at least inspect critically) from those other domains.

The Five Whys is one such approach that I think we should discard.

This post explains my reasoning for discarding it, and how using it has the potential to be harmful, not helpful, to an organization. Here’s how I intend to do this: I’m first going to talk about what I think are deficiencies in the approach, then suggest an alternative, and then ask you to simply try the alternative yourself.

Here is the “bottom line, up front” gist of my assertions:

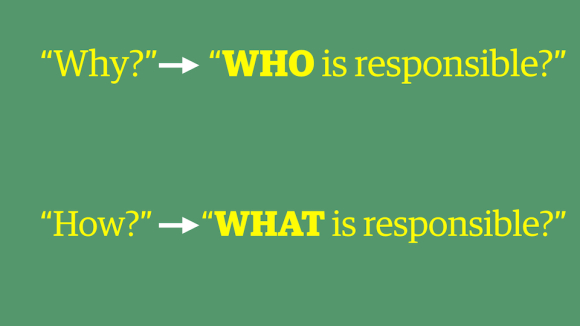

“Why?” is the wrong question.

In order to learn (which should be the goal of any retrospective or post-hoc investigation) you want multiple and diverse perspectives. You get these by asking people for their own narratives. Effectively, you’re asking “how?”

Asking “why?” too easily gets you to an answer to the question “who?” (which in almost every case is irrelevant) or “takes you to the ‘mysterious’ incentives and motivations people bring into the workplace.”

Asking “how?” gets you to describe (at least some of) the conditions that allowed an event to take place, and provides rich operational data.

Asking a chain of “why?” assumes too much about the questioner’s choices, and assumes too much about each answer you get. At best, it locks you into a causal chain, which is not how the world actually works. This is a construction that ignores a huge amount of complexity in an event, and it’s the complexity that we want to explore if we have any hope of learning anything.

But It’s A Great Way To Get People Started!

The most compelling argument for using the Five Whys is that it’s a good first step towards doing real “root cause analysis”. My response to that is twofold:

- “Root Cause Analysis*” isn’t what you should be doing anyway, and

- It’s only a good “first step” because it’s easy to explain and understand, which makes it easy to socialize. The issue with this is that the concepts that the Five Whys depend on are not only faulty, but can be dangerous for an organization to embrace.

If the goal is learning (and it should be), then any method of retrospective learning should give us confidence that it brings to light data that can be turned into actionable information. The issue with the Five Whys is that it’s tunnel-visioned into a linear and simplistic explanation of how work gets done and how events transpire. This narrowing can be incredibly problematic.

In the best case, it can lead an organization to think they’re improving on something (or preventing future occurrences of events) when they’re not.

In the worst case, it can re-affirm a faulty worldview of causal simplification and set up a structure where individuals don’t feel safe giving their narratives, either because they weren’t asked the right “why?” question or because the answer to a given question pointed to ‘human error’ or individual attributes as causal.

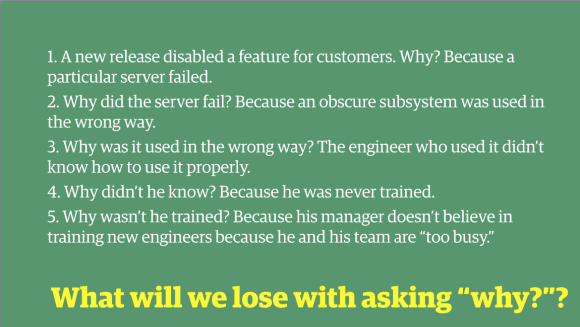

Let’s take an example. In my tutorials at the Velocity Conference in New York, I used an often-repeated straw man to illustrate this:

This is the example of the Five Whys found in the Web Operations book, as well.

This causal chain effectively ends with a person’s individual attributes, not with a description of the multiple conditions that allow an event like this to happen. Let’s look into some of the answers…

“Why did the server fail? Because an obscure subsystem was used in the wrong way.”

This answer is dependent on the outcome. We know that it was used in the “wrong” way only because we’ve connected it to the resulting failure. In other words, we as “investigators” have the benefit of hindsight. We can easily judge the usage of the server because we know the outcome. If we were to go back in time and ask the engineer(s) who were using it, “Do you think that you’re doing this right?”, they would answer: yes, they are. We want to know what influences brought them to think that, and those influences simply won’t fit into the answer to “why?”

The answer also limits the next question that we’d ask. There isn’t any room in the dialogue to discuss things such as the potential for a server to be used in the ‘wrong’ way without resulting in failure, or what ‘wrong’ means in this context. Can the server only be used in two ways, the ‘right’ way or the ‘wrong’ way? And does success (or the absence of a failure) dictate which of those ways it was used? We don’t get to these crucial questions.

“Why was it used in the wrong way? The engineer who used it didn’t know how to use it properly.”

This answer is effectively a tautology, and includes a post-hoc judgement. It doesn’t tell us anything about how the engineer did use the system, which would provide a rich source of operational data, especially for engineers who might be expected to work with the system in the future. Is it really just about this one engineer? Or is it possibly about the environment (tools, dashboards, controls, tests, etc.) that the engineer is working in? If it’s the latter, how does that get captured in the Five Whys?

So what do we find in this chain we have constructed above? We find:

- an engineer with faulty (or at least incomplete) knowledge

- insufficient indoctrination of engineers

- a manager who fouls things up by not being thorough enough in the training of new engineers (indeed: we can make a post-hoc judgement about her beliefs)

If this is to be taken as an example of the Five Whys, then as an engineer or engineering manager, I might not look forward to it, since it focuses on our individual attributes and doesn’t tell us much about the event other than the platitude that training (and convincing people about training) is important.

These are largely answers about “who?” not descriptions of what conditions existed. In other words, by asking “why?” in this way, we’re using failures to explain failures, which isn’t helpful.

If we ask: “Why did a particular server fail?” we can get any number of answers, but one of those answers will be used as the primary way of getting at the next “why?” step. We’ll also lose out on a huge amount of important detail, because remember: you only get one question before the next step.
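To make the single-path problem concrete, here is a minimal Python sketch. Everything in it is hypothetical: the conditions are invented to echo the server-failure example, and representing an incident as a tidy graph of contributing conditions is itself a simplification (and a construction by whoever draws it). The hypothetical `five_whys` function keeps only the first answer at each step, the way a linear chain does; the hypothetical `all_conditions` function collects everything reachable backwards from the same starting question.

```python
# Toy model of the server-failure example. Every entry below is a
# hypothetical contributing condition invented for illustration; each key
# maps to the conditions that (in this sketch) contributed to it.
CONTRIBUTORS: dict[str, list[str]] = {
    "server failed": [
        "obscure subsystem used in an unexpected way",
        "no test covered the new code path",
    ],
    "obscure subsystem used in an unexpected way": [
        "engineer unfamiliar with the subsystem",
        "dashboards gave no warning",
    ],
    "engineer unfamiliar with the subsystem": [
        "training did not cover the subsystem",
        "time pressure on the project",
    ],
    "training did not cover the subsystem": [
        "manager believed training was adequate",
    ],
    "no test covered the new code path": [
        "project scope judged to be small",
    ],
}


def five_whys(event: str, max_steps: int = 5) -> list[str]:
    """Follow one answer per "why?", as a linear Five Whys chain does."""
    chain = [event]
    for _ in range(max_steps):
        answers = CONTRIBUTORS.get(chain[-1], [])
        if not answers:  # the chain stops when nobody offers another answer
            break
        chain.append(answers[0])  # only the first answer is pursued
    return chain


def all_conditions(event: str) -> set[str]:
    """Collect every condition reachable by walking backwards from the event."""
    seen: set[str] = set()
    stack = list(CONTRIBUTORS.get(event, []))
    while stack:
        condition = stack.pop()
        if condition not in seen:
            seen.add(condition)
            stack.extend(CONTRIBUTORS.get(condition, []))
    return seen


if __name__ == "__main__":
    chain = five_whys("server failed")
    print(" -> ".join(chain))
    missed = all_conditions("server failed") - set(chain)
    print(f"{len(missed)} conditions never asked about: {sorted(missed)}")
```

Run as a script, the chain ends at the manager’s beliefs, much like the straw-man example, while four conditions (the missing test, the silent dashboards, time pressure, and how the project’s scope was judged) are never reached. Picking a different first answer would produce a different chain, which is the point: the path depends on the questioner’s choices.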

If instead, we were to ask the engineers how they went about implementing some new code (or ‘subsystem’), we might hear a number of things, like maybe:

- the approach(es) they took when writing the code

- what ways they gained confidence (tests, code reviews, etc.) that the code was going to work in the way they expected it before it was deployed

- what (if any) history of success or failure they have had with similar pieces of code

- what trade-offs they made or managed in the design of the new function

- how they judged the scope of the project

- how much (and in what ways) they experienced time pressure for the project

- the list can go on, if you’re willing to ask more and they’re willing to give more

Rather than judging people for not doing what they should have done, the new view presents tools for explaining why people did what they did. Human error becomes a starting point, not a conclusion. (Dekker, 2009)

When we ask “how?”, we’re asking for a narrative. A story.

In these stories, we get to understand how people work. By going with the “engineer was deficient, needs training, manager needs to be told to train” approach, we might not have a place to ask questions aimed at recommendations for the future, such as:

- What might we put in place so that it’s very difficult to put that code into production accidentally?

- What sources of confidence for engineers could we augment?

As part of those stories, we’re looking to understand people’s local rationality. When it comes to decisions and actions, we want to know how it made sense for someone to do what they did. And make no mistake: they thought what they were doing made sense. Otherwise, they wouldn’t have done it.

Again, I’m not original with this thought. Local rationality (or as Herb Simon called it, “bounded rationality”) is something that sits firmly atop some decades of cognitive science.

These stories we’re looking for contain details that we can pull on and ask more about, which is critical as a facilitator of a post-mortem debriefing, because people don’t always know what details are important. As you’ll see later in this post, reality doesn’t work like a DVR; you can’t pause, rewind and fast-forward at will along a singular and objective axis, picking up all of the pieces along the way, acting like CSI. Memories are faulty and perspectives are limited, so a different approach is necessary.

Not just “how”

In order to get at these narratives, you need to dig for second stories. Asking “why?” will get you first stories. These are not only insufficient answers; they can be very damaging to an organization, depending on the context. As a refresher…

From Behind Human Error, here’s the difference between “first” and “second” stories of human error:

| First Stories | Second Stories |
| --- | --- |
| Human error is seen as cause of failure | Human error is seen as the effect of systemic vulnerabilities deeper inside the organization |
| Saying what people should have done is a satisfying way to describe failure | Saying what people should have done doesn’t explain why it made sense for them to do what they did |
| Telling people to be more careful will make the problem go away | Only by constantly seeking out its vulnerabilities can organizations enhance safety |

Now, read again the straw-man example of the Five Whys above. The questions that we ask frame the answers that we will get in the form of first stories. When we ask more and better questions (such as “how?”) we have a chance at getting at second stories.

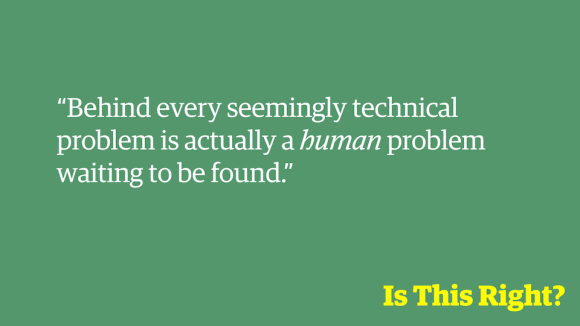

You might wonder: how did I get from the Five Whys to the topic of ‘human error’? Because once ‘human error’ is available as a cause to reach for (and it will be, because it’s a simple and potentially satisfying answer to “why?”), you will undoubtedly use it.

At the beginning of my tutorial in New York, I asked the audience this question:

At the beginning of the talk, a large number of people said yes, this is correct. Steven Shorrock (who is speaking at Velocity next week in Barcelona on this exact topic) has written a great article on this way of thinking: If It Weren’t For The People. By the end of my talk, I was able to convince them that this is also the wrong focus of a post-mortem description.

This idea accompanies the Five Whys more often than not, and there are two things that I’d like to shine some light on about it:

Myth of the “human or technical failure” dichotomy

This is dualistic thinking, and I don’t have much to add to this other than what Dekker has said about it (Dekker, 2006):

“Was the accident caused by mechanical failure or by human error? It is a stock question in the immediate aftermath of a mishap. Indeed, it seems such a simple, innocent question. To many it is a normal question to ask: If you have had an accident, it makes sense to find out what broke. The question, however, embodies a particular understanding of how accidents occur, and it risks confining our causal analysis to that understanding. It lodges us into a fixed interpretative repertoire. Escaping from this repertoire may be difficult. It sets out the questions we ask, provides the leads we pursue and the clues we examine, and determines the conclusions we will eventually draw.”

Myth: during a retrospective investigation, something is waiting to be “found”

I’ll cut to the chase: there is nothing waiting to be found, or “revealed.” These “causes” that we’re thinking we’re “finding”? We’re constructing them, not finding them. We’re constructing them because we are the ones that are choosing where (and when) to start asking questions, and where/when to stop asking the questions. We’ve “found” a root cause when we stop looking. And in many cases, we’ll get lazy and just chalk it up to “human error.”

As Erik Hollnagel has said (Hollnagel, 2009, p. 85):

“In accident investigation, as in most other human endeavours, we fall prey to the What-You-Look-For-Is-What-You-Find or WYLFIWYF principle. This is a simple recognition of the fact that assumptions about what we are going to see (What-You-Look-For), to a large extent will determine what we actually find (What-You-Find).”

More to the point: “What-You-Look-For-Is-What-You-Fix”

We think there is something like the cause of a mishap (sometimes we call it the root cause, or primary cause), and if we look in the rubble hard enough, we will find it there. The reality is that there is no such thing as the cause, or primary cause or root cause. Cause is something we construct, not find. And how we construct causes depends on the accident model that we believe in. (Dekker, 2006)

Nancy Leveson comments on this idea in her excellent book Engineering a Safer World (p. 20):

Subjectivity in Selecting Events

The selection of events to include in an event chain is dependent on the stopping rule used to determine how far back the sequence of explanatory events goes. Although the first event in the chain is often labeled the ‘initiating event’ or ‘root cause’ the selection of an initiating event is arbitrary and previous events could always be added.

Sometimes the initiating event is selected (the backward chaining stops) because it represents a type of event that is familiar and thus acceptable as an explanation for the accident or it is a deviation from a standard [166]. In other cases, the initiating event or root cause is chosen because it is the first event in the backward chain for which it is felt that something can be done for correction.

The backward chaining may also stop because the causal path disappears due to lack of information. Rasmussen suggests that a practical explanation for why actions by operators actively involved in the dynamic flow of events are so often identified as the cause of an accident is the difficulty in continuing the backtracking “through” a human [166].

A final reason why a “root cause” may be selected is that it is politically acceptable as the identified cause. Other events or explanations may be excluded or not examined in depth because they raise issues that are embarrassing to the organization or its contractors or are politically unacceptable.

Learning is the goal. Any prevention depends on that learning.

So if not the Five Whys, then what should you do? What method should you take?

I’d like to suggest an alternative, which is to first accept the idea that you have to actively seek out and protect the stories from bias (and judgement) when you ask people “how?”-style questions. Then you can:

- Ask people for their story without any replay of data that would supposedly ‘refresh’ their memory

- Tell their story back to them and confirm you got their narrative correct

- Identify critical junctures

- Progressively probe and re-build how the world looked to people inside of the situation at each juncture.

As a starting point for those probing questions, we can look to Gary Klein and Sidney Dekker for the types of questions you can ask instead of “why?”…

Debriefing Facilitation Prompts

(from The Field Guide To Understanding Human Error, by Sidney Dekker)

At each juncture in the sequence of events (if that is how you want to structure this part of the accident story), you want to get to know:

- Which cues were observed (what did he or she notice/see or did not notice what he or she had expected to notice?)

- What knowledge was used to deal with the situation? Did participants have any experience with similar situations that was useful in dealing with this one?

- What expectations did participants have about how things were going to develop, and what options did they think they have to influence the course of events?

- How did other influences (operational or organizational) help determine how they interpreted the situation and how they would act?

Here are some questions Gary Klein and his researchers typically ask to find out how the situation looked to people on the inside at each of the critical junctures:

| Topic | Questions |
| --- | --- |
| Cues | What were you seeing? What were you focused on? What were you expecting to happen? |
| Interpretation | If you had to describe the situation to your colleague at that point, what would you have told them? |
| Errors | What mistakes (for example in interpretation) were likely at this point? |
| Previous knowledge/experience | Were you reminded of any previous experience? Did this situation fit a standard scenario? Were you trained to deal with this situation? Were there any rules that applied clearly here? Did any other sources of knowledge suggest what to do? |
| Goals | What were you trying to achieve? Were there multiple goals at the same time? Was there time pressure or other limitations on what you could do? |
| Taking Action | How did you judge you could influence the course of events? Did you discuss or mentally imagine a number of options or did you know straight away what to do? |
| Outcome | Did the outcome fit your expectation? Did you have to update your assessment of the situation? |
| Communications | What communication medium(s) did you prefer to use? (phone, chat, email, video conf, etc.?) Did you make use of more than one communication channel at once? |
| Help | Did you ask anyone for help? What signal brought you to ask for support or assistance? Were you able to contact the people you needed to contact? |

For the tutorials I did at Velocity, I made a one-pager of these: http://bit.ly/DebriefingPrompts

Try It

I have tried to outline some of my reasoning on why using the Five Whys approach is suboptimal, and I’ve given an alternative. I’ll do one better and link you to the tutorials that I gave in New York in October, which I think dig deeper into these concepts. They are in four parts, 45 minutes each.

Part I – Introduction and the scientific basis for post-hoc retrospective pitfalls and learning

Part II – The language of debriefings, causality, case studies, teams coping with complexity

Part IV – Taylorism, normal work, ‘root cause’ of software bugs in cars, Q&A

My request is that the next time you would do a Five Whys, you instead ask “how?” or variations of the questions I posted above. If you think you get more operational data from a Five Whys and are happy with it, rock on.

If you’re more interested in this alternative and the fundamentals behind it, then there are a number of sources you can look to. You could do a lot worse than starting with Sidney Dekker’s Field Guide To Understanding Human Error.

An Explanation

For those readers who think I’m unnecessarily harsh on the Five Whys approach, I think it’s worthwhile to explain why I feel so strongly about this.

Retrospective understanding of accidents and events is important because how we make sense of the past greatly and almost invisibly influences our future. At some point in the not-so-distant past, the domain of web engineering was about selling books online and making a directory of the web. These organizations and the individuals who built them quickly gave way to organizations that now build cars, spacecraft, trains, aircraft, medical monitoring devices…the list goes on…simply because software development and distributed systems architectures are at the core of modern life.

The software worlds and the non-software worlds have collided and will continue to do so. More and more “life-critical” equipment and products rely on software and even the Internet.

Those domains have had varied success in retrospective understanding of surprising events, to say the least. Investigative approaches that are firmly based on causal oversimplification and the “Bad Apple Theory” of deficient individual attributes (like the Five Whys) have been shown not only to be unhelpful, but to make learning objectively harder, not easier. As a result, people who have made mistakes or been involved in accidents have been fired, banned from their profession, and thrown in jail for some of the very things that you could find in a Five Whys.

I sometimes feel nervous that these oversimplifications will still be around when my daughter and son are older. If they were to make a mistake, would they be blamed as a cause? I strongly believe that we can leave these old ways behind us and do much better.

My goal is not to vilify an approach, but to state explicitly that if the world is to become safer, then we have to eschew this simplicity; it will only get better if we embrace the complexity, not ignore it.

Epilogue: The Longer Version For Those Who Have The Stomach For Complexity Theory

The Five Whys approach follows a Newtonian-Cartesian worldview. This is a worldview that is seductively satisfying and compellingly simple. But it’s also false in the world we live in.

What do I mean by this?

There are five reasons why the Five Whys sits firmly in a Newtonian-Cartesian worldview that we should eschew when it comes to learning from past events. This is a CliffsNotes version of “The complexity of failure: Implications of complexity theory for safety investigations” (Dekker, Cilliers, & Hofmeyr, 2011).

First, it is reductionist. The narrative built by the Five Whys sits on the idea that if you can construct a causal chain, then you’ll have something to work with. In other words: to understand the system, you pull it apart into its constituent parts. Know how the parts interact, and you know the system.

Second, it assumes what Dekker has called “cause-effect symmetry” (Dekker, Cilliers, & Hofmeyr, 2011):

“In the Newtonian vision of the world, everything that happens has a definitive, identifiable cause and a definitive effect. There is symmetry between cause and effect (they are equal but opposite). The determination of the ‘cause’ or ‘causes’ is of course seen as the most important function of accident investigation, but assumes that physical effects can be traced back to physical causes (or a chain of causes-effects) (Leveson, 2002). The assumption that effects cannot occur without specific causes influences legal reasoning in the wake of accidents too. For example, to raise a question of negligence in an accident, harm must be caused by the negligent action (GAIN, 2004). Assumptions about cause-effect symmetry can be seen in what is known as the outcome bias (Fischhoff, 1975). The worse the consequences, the more any preceding acts are seen as blameworthy (Hugh and Dekker, 2009).”

John Carroll (Carroll, 1995) called this “root cause seduction”:

The identification of a root cause means that the analysis has found the source of the event and so everyone can focus on fixing the problem. This satisfies people’s need to avoid ambiguous situations in which one lacks essential information to make a decision (Frisch & Baron, 1988) or experiences a salient knowledge gap (Loewenstein, 1993). The seductiveness of singular root causes may also feed into, and be supported by, the general tendency to be overconfident about how much we know (Fischhoff, Slovic, & Lichtenstein, 1977).

That last bit about a tendency to be overconfident about how much we know (in this context, how much we know about the past) draws on strong research by Baruch Fischhoff, who originally researched what we now understand to be the Hindsight Bias. Unsurprisingly, Fischhoff’s doctoral thesis advisor was Daniel Kahneman (you’ve likely heard of him as the author of Thinking, Fast and Slow), whose research on cognitive biases and heuristics everyone should be at least vaguely familiar with.

The third issue with this worldview, supported by the Five Whys and following logically from the earlier points, is the assumption that outcomes are foreseeable if you know the initial conditions and the rules that govern the system. The reason that you would even construct a serial causal chain like this is because

The fourth part of this is that time is irreversible. We can’t treat a causal chain as something that you can fast-forward and rewind, no matter how attractively simple that seems. This is because the socio-technical systems that we work on and work in are complex in nature, and are dynamic. Deterministic behavior (or at least predictability) is something that we look for in software; in complex systems this is a foolhardy search, because emergence is a property of this complexity.

And finally, there is an underlying assumption that complete knowledge is attainable. In other words: we only have to try hard enough to understand exactly what happened. The issue with this is that success and failure have many contributing causes, and there is no comprehensive and objective account. The best that you can do is to probe people’s perspectives at juncture points in the investigation. It is not possible to understand past events in any way that can be considered comprehensive.

Dekker (Dekker, Cilliers, & Hofmeyr, 2011):

As soon as an outcome has happened, whatever past events can be said to have led up to it, undergo a whole range of transformations (Fischhoff and Beyth, 1975; Hugh and Dekker, 2009). Take the idea that it is a sequence of events that precedes an accident. Who makes the selection of the “events” and on the basis of what? The very act of separating important or contributory events from unimportant ones is an act of construction, of the creation of a story, not the reconstruction of a story that was already there, ready to be uncovered. Any sequence of events or list of contributory or causal factors already smuggles a whole array of selection mechanisms and criteria into the supposed “re”construction. There is no objective way of doing this–all these choices are affected, more or less tacitly, by the analyst’s background, preferences, experiences, biases, beliefs and purposes. “Events” are themselves defined and delimited by the stories with which the analyst configures them, and are impossible to imagine outside this selective, exclusionary, narrative fore-structure (Cronon, 1992).

Here is a thought exercise: what if we were to try to use the Five Whys for finding the “root cause” of a success?

Why didn’t we have failure X today?

Now it becomes a lot more difficult to give this question a single answer. This is because things go right for many reasons, not all of them obvious. We can spend all day writing down reasons why we didn’t have failure X today, and if we’re committed, we can keep going.

So if success requires “multiple contributing conditions, each necessary but only jointly sufficient” to happen, then how is it that failure only requires just one? The Five Whys, as it’s commonly presented as an approach to improvement (or: learning?), will lead us to believe not only that just one condition is sufficient, but that this condition is a canonical one, to the exclusion of all others.
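The phrase “each necessary but only jointly sufficient” can be made concrete with a toy model. This Python sketch rests on loudly stated assumptions: the four conditions are hypothetical, and the outcome is modeled as a plain logical AND of them, which real socio-technical systems are not. Under that assumption, every condition passes the counterfactual “but for this, it would not have happened” test, so any one of them could be written up as “the root cause.” Which one gets picked is a decision about where to stop asking, not a discovery.

```python
from itertools import combinations

# Hypothetical conditions present on the day of an outage (invented for
# illustration). In this toy model each one is necessary and only all of
# them together are sufficient; real systems are rarely this tidy.
CONDITIONS: frozenset[str] = frozenset({
    "new code path deployed",
    "obscure subsystem reachable from that path",
    "no test exercised the path",
    "traffic spike that evening",
})


def outcome_occurs(present: frozenset[str]) -> bool:
    """The outcome happens only when every condition holds at once."""
    return CONDITIONS <= present


def but_for_causes(present: frozenset[str]) -> set[str]:
    """Conditions whose removal alone would have prevented the outcome."""
    return {c for c in present if not outcome_occurs(present - {c})}


def sufficient_subsets(present: frozenset[str]) -> list[set[str]]:
    """Every subset of the present conditions that produces the outcome."""
    return [
        set(subset)
        for size in range(len(present) + 1)
        for subset in combinations(sorted(present), size)
        if outcome_occurs(frozenset(subset))
    ]


if __name__ == "__main__":
    print(sorted(but_for_causes(CONDITIONS)))  # all four pass the test
    print(len(sufficient_subsets(CONDITIONS)))  # only the full set suffices: 1
```

In this sketch no single condition is sufficient and every condition is necessary, so a method that returns exactly one “cause” has to discard three equally valid candidates.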

* RCA, or “Root Cause Analysis” can also easily turn into “Retrospective Cover of Ass”

References

Carroll, J. S. (1995). Incident Reviews in High-Hazard Industries: Sense Making and Learning Under Ambiguity and Accountability. Organization & Environment, 9(2), 175–197. doi:10.1177/108602669500900203

Dekker, S. (2004). Ten questions about human error: A new view of human factors and system safety. Mahwah, N.J: Lawrence Erlbaum.

Dekker, S., Cilliers, P., & Hofmeyr, J.-H. (2011). The complexity of failure: Implications of complexity theory for safety investigations. Safety Science, 49(6), 939–945. doi:10.1016/j.ssci.2011.01.008

Hollnagel, E. (2009). The ETTO principle: Efficiency-thoroughness trade-off: Why things that go right sometimes go wrong. Burlington, VT: Ashgate.

Leveson, N. (2012). Engineering a Safer World. MIT Press.

Hello John,

I like your writing and this subject very much, but I really disagree with your conclusions about root cause analysis. Respectfully, using “5 Whys” (and its many faults) as the means to bash “root cause analysis” in general is kind of a weak argument. Claiming that complexity erases causality from the universe is an even worse argument — it is oft-stated, but is never validated or proven.

How does an effect occur? It is produced by something, i.e., it is caused! Perhaps complex system interactions make the tracing of cause-effect relationships difficult, but that’s no proof that such relationships do not exist. Difficulty is no excuse for not trying. Besides, how else are you going to find all the factors that explain HOW the effect occurred?

I don’t mean to be insulting here, but I really don’t see how “complex systems + safety + human factors” people are always so stuck on the models of root cause analysis that were in vogue 20 to 30 years ago. We’ve moved on! We read Sidney Dekker’s Field Guide when it first came out, we recognized and employed the idea that “people did what they did because it made sense to them at the time”, we championed the idea that identifying “human error” as a root cause was morally bankrupt. We bought into the ideas that systems are complex, that effects may be far removed from initiating disturbances, that a huge number of factors individually in-spec could combine to create an effect of unexpected magnitude, that organizations / process / systems are the places to find fundamental root causes, etc. etc. etc.

I mean, come on! “This causal chain effectively ends with a person’s individual attributes, not with a description of the multiple conditions that allow an event like this to happen.” Is that what you think we’re really doing? Really?! How can you totally misunderstand what it is that we do as root cause investigators and analysts?

Here, take a look at a few things I’ve written about these topics over the years, then tell me where I’m going wrong.

http://www.bill-wilson.net/b34 – Phases of Root Cause Analysis

http://www.bill-wilson.net/b86 – Optimization is not the Goal

http://www.bill-wilson.net/b46 – Interfaces and Contradictions

http://www.bill-wilson.net/b64 – Human Error

There’s more, but these are probably the most relevant… and note that these were all written like 9 or 10 years ago. These are not new ideas we’re talking about here!

Respectfully,

Bill Wilson

Very thorough write-up, I would only add that what I found really helps with all of this questioning is taking a design oriented approach to looking at your systems. Outages require machine failure of some level, if we take the position that software should be written not just to do a thing but to promote a proper outcome, it helps keep the focus on the systems we have built, and how to improve them, rather than the operators. I’ll be expanding on this idea in my upcoming Velocity talk as well 🙂

Bill Wilson –

I appreciate your comment! I’m not addressing your field in this post, I’m addressing mine. 🙂

Some thoughts:

– I haven’t stated that complexity *erases* causality. I’m arguing against the oversimplification of causality, which I think you’ll agree comes from linear and sequential models. The Five Whys is such a linear/sequential model as it’s generally practiced and explained, in my domain at least. Many of the people I have seen evolve the 5W approach (such as Dan Milstein, here: http://www.slideshare.net/danmil30/how-to-run-a-5-whys-with-humans-not-robots) have changed or augmented it enough that I wouldn’t really call it the 5W anymore.

– The methods and approaches that I’m suggesting: yes, indeed, they are many years old! And yet they are not commonplace. In your field, they may be. But not in my domain. (Ex: http://www.fastcodesign.com/1669738/to-get-to-the-root-of-a-hard-problem-just-ask-why-five-times) My post above is aimed not at RCA, per se, but at the current accepted thinking on the Five Whys. Much respect to the Lean Startup effort, but this is the approach I don’t agree with: http://www.youtube.com/watch?v=JmrAkHafwHI

– A number of places in your writing reflect my same perspective.

(An example: “However, don’t get tricked into thinking that a ’cause’ is always a single, self-contained entity or unit.” – from http://www.bill-wilson.net/b46) You touch on the lure of counterfactuals, and on cognitive biases and pitfalls in narratives. We are in full agreement on these ideas.

– I would like to agree with you on this (http://www.bill-wilson.net/systems-thinking-complexity-and-root-cause), but I cannot: “root cause analysis can be (and frequently is) conducted from a systems thinking viewpoint, with knowledge of complex systems phenomena and a focus on understanding the system rather than fixating on blame and human error.” – I have yet to see any RCA practice in my industry take this approach/view.

– The idea that the world (not yours, but others’, such as mine) has “moved on” from the thinking and approaches of 20 or 30 years ago is mistaken. Yours is a nuanced perspective that is not understood or known in most small, medium, or large-scale web operations. “Multiple contributing conditions, each necessary but jointly sufficient” is not where I can say (with confidence) my industry is yet; they are at a->b->c->d->e, and it’s linear/sequential the entire way, like the assembly line at Toyota. Search for (“root cause” AND outage) and you’ll get a glimpse of how systemic accident models are not yet embraced or known.

– What I’m proposing above looks much more like process tracing/protocol analysis (yes, human factors methods) than it does causal trees. My suggestion that “how?” questions will get us more operational data in the construction of a narrative than “why?” essentially comes from Todd Conklin, and I think that, short of using a systemic accident model, it brings a high potential for learning.

– If accident models are what people are looking for, then I would much rather see Hollnagel’s FRAM, Leveson’s CAST, or Rasmussen’s AcciMap approach be part of the dialogue, as opposed to the Five Whys, fishbone, FTA, or Swiss Cheese Model.

Again, thanks for the comment. This post is not an attack on how you approach RCA; it’s a critique of the Five Whys. There is a lot more nuance in the Velocity tutorial videos I linked to that unpacks it a bit more.

– allspaw

Hi John!

Have you come across Cause-Effect-Diagrams? http://blog.crisp.se/2009/09/29/henrikkniberg/1254176460000

They don’t address your initial points (“Why” is the wrong question; What’s a root cause anyway?) but I’ve still found them valuable: easy to facilitate and vastly more applicable than 5 Whys. Here’s my experience when I first tried them:

http://finding-marbles.com/2011/08/04/cause-effect-diagrams/

Best regards,

Corinna

Thanks, Allspaw, for your thorough explanation of how a specific technique, the 5 Whys, can lead to a selective and biased identification of cause that quite often lands on the wrong cause. I like the idea of focusing on ‘how’, and, dare I say, asking ‘what happened to lead to this error?’ would open the conversation to more issues than a linear cause-effect analysis does. The question ultimately, though, is ‘what do we act on, assuming there are multiple causes leading to the error?’ Due to capacity constraints (which are the norm for most companies), a good prioritization model is required, along with salient criteria that define what makes one cause more important to work on than another. Determining those criteria would also be biased, since it rests on the judgement of some person or group. It seems, therefore, that no matter what we use to define and resolve error, there will always be a ‘selective’ or ‘biased’ component. We can only hope that the selection of what to focus on uses criteria that look from a ‘systemic’ or ‘larger picture’ perspective rather than a ‘person’ or ‘departmental’ one. At the end of the day, bias will always be present in these models; what matters is our willingness to self-correct quickly and to stay open about how we deal with that bias should our conclusions be shown to be wrong.

Michael – Thanks for the comment!

The point you make is absolutely true. Not only is work in complex systems subject to what Hollnagel called an “efficiency-thoroughness trade-off” (ETTO), but we could also say that an investigation or post-hoc retrospective look at an event is constrained by ETTO as well. In other words, the length and effort involved in an investigation are always negotiable, and where we choose to focus our recommendations or remediations is negotiable as well. What this means is that (hopefully) judgement will be exercised about where to focus effort. I would say that is a feature, not a bug. The most important part of capturing a diverse and wide group of narratives from individuals (not simply answers to questions like ‘why’) is that you’re including (and sometimes deferring to) those closest to the work in deciding which actions to take next, hopefully preventative ones. This mix of individual narratives and organizational context is important. The US Forest Service is doing excellent work in this area. They look for an accident investigation to produce multiple products of learning that include both of those perspectives.

Jens Rasmussen wrote a seminal paper that touches on these topics that I still re-read quite often: http://sunnyday.mit.edu/16.863/rasmussen-safetyscience.pdf

What you point out is that organizationally, these events and what learning we can extract from them are context-dependent. This is great validation that the blog post got the point across.

Thanks very much for this. 🙂

Hi there John…

I appreciated reading your post this morning. I’ve been a root cause investigator for 15 years and it’s amazing to see the field growing within IT service management. Most of our work takes place in Safety, Quality, and Reliability… but over the last few years, IT Problem Management has been the fastest-growing area. Like Bill Wilson said above, any of us who do this for a living have moved well past the 5-whys or the Ishikawa Fishbone processes. You’ve identified our major gripes with the 5-whys – it’s linear, reductionist, often points to human error, and presupposes that a single “silver bullet” cause exists (one cause to rule them all), when there is no such thing. Yet, the 5-whys persists. There are multiple causes for this, but three big contributors are 1) It’s simple, 2) It seems to make sense, and 3) It’s free. Making the argument that everyone should ditch the 5-whys is like making the argument that they should stay away from fast-food and cook their own meals. Of course they should. But even when you explain it (and sometimes even get some to agree with you) many still won’t change. No one budgets for problem-solving when planning a project, web-dev or otherwise. So when problems inevitably come up, they aren’t equipped to respond. Completing the investigation and getting back on task becomes the problem. The 5-whys is cheap, quick, easy, and provides the illusion that a root cause investigation has been completed. Therefore work can begin again. If the problem doesn’t happen again, it reinforces the validity of the method, when in all likelihood the 5-whys investigation did absolutely nothing to reduce risk. The problem didn’t happen again because it just didn’t.

I agree with your message! Web dev firms, please don’t fall into the trap of incorporating 5-whys! However, don’t throw the baby out with the bathwater either. If you’ve seen one type of root cause analysis, you’ve seen one type of root cause analysis. My own experience is that an RCA method based on logical causal relationships is extremely helpful in modeling the complexities of an event (adverse or positive in outcome), including the human factors. I’ll even go out on a limb to say that a logical, evidence-based causal model coupled with an experienced facilitator is the fastest, most effective way of accounting for the complex interactions that precede an adverse event and identifying an effective set of solutions that mitigate the risk of recurrence.

Hi, I’m not sure which definition of “The Five Whys” you are referring to, but the first one that comes to my mind is the Toyota one. And if we are talking about that one, I think it was very badly represented in this article. To me it looks like you are arguing against the wrong application and interpretation of the Five Whys.

I’m quite sure you are talking about reality here: someone in a company reads a blog about the Five Whys and tries to apply it leading to all the bad effects you address.

But I did not find any of this in the beautiful book Toyota Kata, where the Five Whys are described exactly as your “Hows”: deep and neutral observation of the event to fully understand it. The tendency to rush to a solution is described as something to avoid.

When applying tools like the 5-Whys people often forget the importance of context. I first came across the 5 Whys in the mid 90s. I worked for a semiconductor test equipment manufacturer who had embraced TQM. What we found was the TQM toolset (which included 5 Whys root cause analysis) worked really well in manufacturing, but we didn’t see the same results when applying it to Software…

At the time we had no real explanation as to why. Intuitively we knew that software was very different from manufacturing, so we stuck with what we knew worked well for software (which, incidentally, looked a lot like the Agile practices of today), but we struggled to explain this to senior management, who were heavily bought into TQM.

It was only years later that I came across an explanation as to why. The reason is complexity. Manufacturing is simple, with clear relationships between cause and effect. Software just isn’t like that; it is complex, and cause and effect are not easily determined.

The simple answer is to remember that no tool is universally applicable. Try things in your context, and stick with what works.

The “5 whys” and deeper problem-solving methodologies from Lean and Toyota are, of course, not supposed to degrade into asking “who?” There are old human habits of blame to guard diligently against.

If “naming, blaming, and shaming” is still the default for an organization, asking “how” questions could just as easily devolve into blame and “who?”

I’m not sure if the words matter as much as the mindset and being mindful about not slipping into blame.

The first “why” question has to do with motivation and intention. Motivation is the driving force behind our will and may be positive or negative. Intention has to do with the course of action towards the business goals. Both are important, and the right answer provides a better understanding of the problem.

The five-why method becomes the “how” as your maturity and feedback loop scale up.

The “Five Whys”, however, require strong communication and team spirit, which are usually missing during a fault (or a crash), when everybody is trying to avoid taking the blame. If you are working in an organization where “taking responsibility” is a privilege, then the Five Whys work fine.

I would like to see practical examples of when the “five-why” method didn’t work, and the reasoning behind them.

The simplest mistake smart people make. How is born of why.

I appreciate your article, though as others have pointed out, you’re really complaining about the misunderstanding and misapplication of the 5 Whys. (This is similar to being “one minute managed,” as opposed to receiving the effective feedback the book advocates.)

I will advocate for doing “5 Whys” as well as “5 Hows,” realizing you should be looking for three different sets of answers:

– How did it happen? (Causation)

– How could it be prevented? (Mitigation)

– Why was it a problem? (Impact)

The last question is possibly more important than the other two. If you can avoid the impact, then it’s no longer a problem. Additionally, this may prevent other types of incidents from becoming a problem. However, this does require you to get to a point where you can do an about-face to the 5 Hows to find a solution or mitigation for the impact.

Thanks so much for your comment, David.

There is significant variation across the industry on how the “Five Whys” are actually practiced and my critique was aimed at the common interpretation and expectations.

One of the most popularizing descriptions of the approach is this: https://www.youtube.com/watch?v=_Xe_8smrshY

That almost certainly doesn’t fit the frame you’ve given here, which I agree goes beyond, and is much better than, the naive application of the approach.

There is research showing that, because of their linguistic signaling, “why” questions don’t always elicit what the questioner is genuinely looking for: https://www.adaptivecapacitylabs.com/blog/2020/01/08/tricks-of-the-trade-on-how-versus-why/

It’s rather late, but instead of 5 whys, I like to use 5 Ws and 2 Hs (who, what, why, where, when, how, and how much): information gathering through thorough interrogation (data analysis), but with zero blame. Even adversaries and intentionally malicious actors exploiting systemic weaknesses shouldn’t be blamed, just quietly observed and learned from, to (hopefully!) prevent or at least reduce the likelihood of similar incidents recurring. Remove emotions and focus on the cold, hard facts. Thanks for the article.