I thought it might be worth digging in a bit deeper on something that I mentioned in the Advanced Postmortem Fu talk I gave at last year’s Velocity conference.

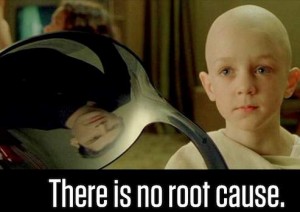

For complex socio-technical systems (web engineering and operations) there is a myth that deserves to be busted: the assumption that for outages and accidents, there is a single unifying event that triggers a chain of events leading to the outage.

This is actually a fallacy, because for complex systems:

there is no root cause.

This isn’t entirely intuitive, because it goes against our nature as engineers. We like to simplify complex problems so we can work on them in a reductionist fashion. We want there to be a single root cause for an accident or an outage, because if we can identify that, we’ve identified the bug that we need to fix. Fix that bug, and we’ve prevented this issue from happening in the future, right?

There’s also a strong tendency in causal analysis (especially in our field, IMHO) to find the single place where a human touched something, and point to that as the “root” cause. Those dirty, stupid humans. That way, we can put the singular onus on “lack of training”, or the infamously terrible label “human error.” This, of course, isn’t a winning approach either.

But, you might ask, what about the “Five Whys” method of root cause analysis? Starting with the outcome and working backwards towards an originally triggering event along a linear chain feels intuitive, which is why it’s so popular. Plus, those Toyota guys know what they’re talking about. But it also falls prey to the same issue with regard to assumptions surrounding complex failures.

As this excellent post in Workplace Psychology rightly points out, the Five Whys has limitations:

An assumption underlying 5 Whys is that each presenting symptom has only one sufficient cause. This is not always the case and a 5 Whys analysis may not reveal jointly sufficient causes that explain a symptom.

There are some other limitations of the Five Whys method outlined there, such as it not being an idempotent process, but the point I want to make here is that linear mental models of causality don’t capture what is needed to improve the safety of a system.
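To make the single-cause assumption concrete, here is a minimal sketch in Python. The cause graph, the condition names, and the `five_whys` and `all_roots` helpers are all hypothetical and made up for illustration; they are not taken from any real incident or tool. The sketch assumes each effect has more than one contributing cause, and shows that a walk which follows only one answer per "why" ends at a different "root" depending on which answer the investigator happens to pick.

```python
# Hypothetical contributing-cause graph (invented for illustration):
# each effect maps to the conditions that jointly produced it.
CAUSES = {
    "site outage": ["db connection pool exhausted", "retry storm from web tier"],
    "db connection pool exhausted": ["slow query shipped in deploy", "pool size never revisited"],
    "retry storm from web tier": ["aggressive client timeout", "no backoff in retry logic"],
}


def five_whys(symptom, pick, depth=5):
    """Walk backwards from a symptom, following only ONE cause per 'why'."""
    chain = [symptom]
    current = symptom
    for _ in range(depth):
        parents = CAUSES.get(current)
        if not parents:
            break  # nothing further recorded: the walk declares this the "root"
        current = pick(parents)
        chain.append(current)
    return chain


def all_roots(symptom):
    """Collect every leaf condition reachable from the symptom."""
    parents = CAUSES.get(symptom)
    if not parents:
        return {symptom}
    roots = set()
    for parent in parents:
        roots |= all_roots(parent)
    return roots


# Two investigators who answer each "why" differently reach different "roots".
first = five_whys("site outage", pick=lambda parents: parents[0])
last = five_whys("site outage", pick=lambda parents: parents[-1])

print(first[-1])
print(last[-1])
print(sorted(all_roots("site outage")))
```

Neither chain is wrong, but each one leaves out most of the conditions that `all_roots` turns up, which is the limitation the quote describes.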

Generally speaking, linear chain-of-events approaches are akin to viewing the past as a line-up of dominoes, and reality with complex systems simply doesn’t work like that. Looking at an accident this way ignores surrounding circumstances in favor of a cherry-picked list of events, validates hindsight and outcome bias, and focuses too much on components and not enough on the interconnectedness of components.

During stressful times (like outages) people involved with response, troubleshooting, and recovery also often mis-remember the events as they happened, sometimes unconsciously neglecting critical facts and timings of observations, assumptions, etc. This can obviously affect the results of using a linear accident investigation model like the Five Whys.

However, identifying a singular root cause and a linear chain that stems from it makes things very easy to explain, understand, and document. This can help us feel confident that we’re going to prevent future occurrences of the issue, because there’s just one thing to fix: the root cause.

Even the eminent cognitive engineering expert James Reason’s epidemiological (the “Swiss Cheese”) model exhibits some of these limitations. While it does help capture multiple contributing causes, the mechanism is still linear, which can encourage people to think that if they only were to remove one of the causes (or fix a ‘barrier’ to a cause in the chain) then they’ll be protected in the future.

I will, however, point out that having an open and mature process of investigating causality, using any model, is a good thing for an organization, and the Five Whys can help kick off the critical thinking needed. So I’m not specifically knocking the Five Whys as a practice with no value, just that it’s limited in its ability to identify items that can help bring resilience to a system.

Again, this tendency to look for a single root cause for fundamentally surprising (and usually negative) events like outages is ubiquitous, and hard to shake. When we’re stressed for technical, cultural, or even organizationally political reasons, we can feel pressure to get to resolution on an outage quickly. And when there’s pressure to understand and resolve a (perceived) negative event quickly, we reach for oversimplification. Some typical reasons for this are:

- Management wants an answer to why it happened quickly, and they might even look for a reason to punish someone for it. When there’s a single root cause, it’s straightforward to pin it on “the guy who wasn’t paying attention” or who “is incompetent.”

- The engineers involved with designing/building/operating/maintaining the infrastructure touching the outage are uncomfortable with the topic of failure or mistakes, so the reaction is to get the investigation over with. This encourages oversimplification of the causes and remediation.

- The failure is just too damn complex to keep in one’s head. Hindsight bias encourages counter-factual thinking (“…if only we had paid attention, we could have seen this coming!” or “…we should have known better!”) which pushes us into thinking the cause is simple.

So if there’s no singular root cause, what is there?

I agree with Richard Cook’s assertion that failures in complex systems require multiple contributing causes, each necessary but only jointly sufficient.

Hollnagel, Woods, Dekker and Cook point out in this introduction to Resilience Engineering:

Accidents emerge from a confluence of conditions and occurrences that are usually associated with the pursuit of success, but in this combination–each necessary but only jointly sufficient–able to trigger failure instead.
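The phrase "each necessary but only jointly sufficient" can be read as a simple logical claim, and a toy model may help make it tangible. The sketch below is an assumption-laden illustration, not a model of any real system: the three condition names and the `outage_occurs` rule are invented, and the rule deliberately encodes a single failing combination.

```python
from itertools import combinations

# Hypothetical contributing conditions, invented for illustration.
CONDITIONS = (
    "deploy during peak traffic",
    "stale cache config",
    "alert threshold too lax",
)


def outage_occurs(present):
    """Toy rule: this failure happens only when every condition is present."""
    return all(condition in present for condition in CONDITIONS)


def is_necessary(condition):
    """Necessary: take this one condition away and the failure does not happen."""
    return not outage_occurs(set(CONDITIONS) - {condition})


def is_sufficient_alone(condition):
    """Sufficient alone: this condition by itself produces the failure."""
    return outage_occurs({condition})


# Every combination of conditions that produces the failure in this toy model.
failing_combinations = [
    set(combo)
    for size in range(1, len(CONDITIONS) + 1)
    for combo in combinations(CONDITIONS, size)
    if outage_occurs(set(combo))
]

for condition in CONDITIONS:
    print(condition, is_necessary(condition), is_sufficient_alone(condition))
print(failing_combinations)
```

In this toy model every condition is necessary, none is sufficient alone, and the only failing combination is all three together, so no single one of them can be singled out as "the" cause. Real systems differ in an important way: they contain many such combinations, most of them unknown in advance, so removing one condition from one combination does not by itself make the system safe.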

Frankly, I think that this tendency to look for singular root causes also comes from how deeply entrenched modern science and engineering are in the tenets of reductionism. So I blame Newton and Descartes. But that’s for another post. 🙂

Because complex systems have emergent behaviors, not resultant ones, it can be put another way:

Finding the root cause of a failure is like finding a root cause of a success.

So what does that leave us with? If there’s no single root cause, how should we approach investigating outages, degradations, and failures in a way that can help us prevent, detect, and respond to such issues in the future?

The answer is not straightforward. In order to truly learn from outages and failures, systemic approaches are needed, and there are a couple of them mentioned below. Regardless of the implementation, most systemic models recognize these things:

- …that complex systems involve not only technology but organizational (social, cultural) influences, and those deserve equal (if not more) attention in investigation

- …that fundamentally surprising results come from behaviors that are emergent. This means they can and do come from components interacting in ways that cannot be predicted.

- …that nonlinear behaviors should be expected. A small perturbation can result in catastrophically large and cascading failures.

- …that human performance and variability are not intrinsically coupled with causes. Terms like “situational awareness” and “crew resource management” are blunt concepts that can mask the reasons why it made sense for someone to act in a way that they did with regard to a contributing cause of a failure.

- …diversity of components and complexity in a system can augment the resilience of a system, not simply bring about vulnerabilities.

For the real nerdy details, Zahid H. Qureshi’s A Review of Accident Modelling Approaches for Complex Socio-Technical Systems covers the basics of the current thinking on systemic accident models: Hollnagel’s FRAM, Leveson’s STAMP, and Rasmussen’s framework are all worth reading about.

Also appropriate for further geeking out on failure and learning:

Hollnagel’s talk, On How (Not) To Learn From Accidents

Dekker’s wonderful Field Guide To Understanding Human Error

So the next time you read or hear a report with a singular root cause, alarms should go off in your head. In the same way that you shouldn’t ever have “human error” as a root cause, if you only have a single root cause, you haven’t dug deep enough. 🙂